The Screening Machine

College and university admissions departments face an annual challenge: sorting through what are often many thousands of applications for far fewer places in the incoming freshman class.

In fact, a low acceptance rate (or, conversely, a high rejection rate) is seen as a sign of an institution's selectivity and quality. It also helps the school's position on the 'Best Colleges' lists that matter so much to college administrators, current and prospective students, parents, and alumni.

It's kind of a virtuous cycle: improved institutional reputation --> more applications --> greater selectivity --> higher ranking on the lists --> improved reputation. And so on. Mix in a successful sports team once in a while, and the school is on its way to more donations, more research grants, and a spot on the Presidential debate hosting roster.

It's all good, except for the folks in the admissions departments who do most of the work of awarding these highly sought-after slots in the incoming class to the select 10% (or fewer) of applicants who will make the cut. And as most of us remember from the college application process, whether our own or our kids', the applications are long and complex. They contain a complicated mix of standard measures (SAT scores), sort-of-standard measures (comparative GPAs), and completely non-standard, subjective measures (essays, recommendations, after-school activities).

The Admitulator

All in all, it is a difficult recruiting and 'hiring' process, not unlike what happens in corporate recruiting every day. Companies often receive hundreds of applications for a position. The evaluation criteria are a mixture of the standard (advanced degrees) and the non-standard (impressions left after an interview), and making the right selection has important, long-term consequences for the organization. In both college admissions and corporate recruiting, making the 'right' choice isn't easy.

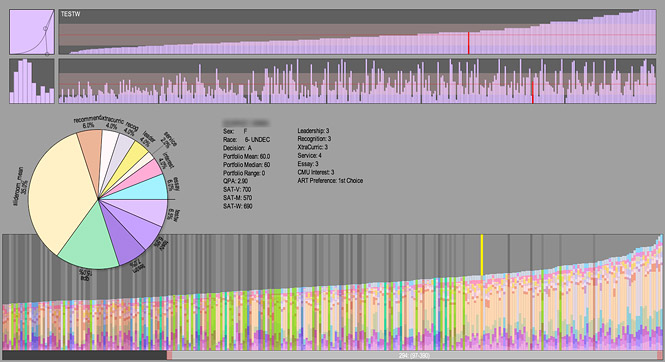

That is why I find a new, experimental program called the 'Admitulator' so interesting. In the description written by the tool's designer, Golan Levin:

> The Admitulator is a custom tool for quantitatively evaluating university applicants according to a diverse array of weighted metrics. A pie chart is the core interface for ranking, sorting and evaluating applicants; it allows faculty with different admissions priorities to explore and negotiate different balances between applicant features (such as e.g. portfolio scores, standardized test scores, grade point averages, essay evaluations, etcetera).
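
At its simplest, a pie chart of weighted metrics comes down to a weighted sum. Each slice is one metric's share of the total weight, and an applicant's composite score is the sum of that applicant's metric scores, each multiplied by its slice size. The Python below is a minimal sketch under that assumption, not Levin's actual implementation. The `Applicant` class, the metric names, and all of the numbers are hypothetical, and each metric is assumed to be pre-normalized to a 0 to 1 scale.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Applicant:
    name: str
    # Hypothetical metrics, each assumed to be pre-normalized to the range 0..1.
    scores: dict[str, float]


def normalize(weights: dict[str, float]) -> dict[str, float]:
    """Turn raw slice sizes into shares that sum to 1, like a pie chart."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {metric: w / total for metric, w in weights.items()}


def composite(applicant: Applicant, weights: dict[str, float]) -> float:
    """Weighted sum of an applicant's metric scores; a missing metric counts as 0."""
    shares = normalize(weights)
    return sum(share * applicant.scores.get(metric, 0.0) for metric, share in shares.items())


def rank(applicants: list[Applicant], weights: dict[str, float]) -> list[Applicant]:
    """Sort applicants from highest to lowest composite score."""
    return sorted(applicants, key=lambda a: composite(a, weights), reverse=True)


# Made-up applicants and weightings, purely for illustration.
applicants = [
    Applicant("A", {"portfolio": 0.9, "sat": 0.6, "gpa": 0.7, "essay": 0.8}),
    Applicant("B", {"portfolio": 0.5, "sat": 0.95, "gpa": 0.9, "essay": 0.6}),
]
portfolio_heavy = {"portfolio": 50, "sat": 10, "gpa": 20, "essay": 20}
test_heavy = {"portfolio": 10, "sat": 50, "gpa": 30, "essay": 10}

print([a.name for a in rank(applicants, portfolio_heavy)])  # ['A', 'B']
print([a.name for a in rank(applicants, test_heavy)])       # ['B', 'A']
```

Changing only the slice sizes reverses the order of the same two applicants. This is the kind of disagreement between faculty with different priorities that the pie-chart interface lets them explore and negotiate.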

A tool like the Admitulator attempts to reconcile two things: the wide variability in prospective students' achievements, capabilities, and potential, and the selectors' differing beliefs (and biases) about what makes a successful student, including one who contributes positively to the overall institution. In doing so, it has the potential to genuinely inform the college about its admission practices, its decision processes, and their relative success or failure.

The tool doesn't 'tell' the college which students to admit. Rather, it provides insight into how biases and widely held (but never tested) beliefs in the admission process shape the composition of the incoming class. Later, when compared against the academic results of the students admitted this way, it can help admissions staff and faculty learn which screening metrics are more likely to identify students who succeed over the longer term.
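
Checking screening metrics against later academic results could start as simply as correlating each metric with an outcome measure. The sketch below uses plain Pearson correlation and assumes a hypothetical record layout: a `screening` dict of scores at admission plus a `college_gpa` outcome measured later. It is one possible approach, not how the Admitulator itself works, and the records are invented for illustration only.

```python
from __future__ import annotations

import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (spread_x * spread_y)


def metric_correlations(admitted: list[dict], outcome_key: str) -> dict[str, float]:
    """Correlate each screening metric with a later outcome across admitted students."""
    outcomes = [student[outcome_key] for student in admitted]
    metrics = admitted[0]["screening"].keys()
    return {
        m: pearson([student["screening"][m] for student in admitted], outcomes)
        for m in metrics
    }


# Invented records for illustration only: screening scores at admission
# and a hypothetical outcome (college GPA) measured later.
admitted = [
    {"screening": {"sat": 0.9, "essay": 0.5}, "college_gpa": 3.1},
    {"screening": {"sat": 0.7, "essay": 0.8}, "college_gpa": 3.6},
    {"screening": {"sat": 0.8, "essay": 0.6}, "college_gpa": 3.3},
    {"screening": {"sat": 0.6, "essay": 0.9}, "college_gpa": 3.8},
]
correlations = metric_correlations(admitted, "college_gpa")
for metric, r in sorted(correlations.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{metric}: r = {r:+.2f}")
```

One caveat: outcomes exist only for students who were admitted. The correlations therefore describe a pre-screened group and can understate or distort how well a metric separates applicants overall.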

It is kind of a neat tool.

We all have our biases and preferences in the hiring process: advanced degrees trump skills (or vice versa), we only hire from certain colleges, or we only like to poach from a select few competitors. But do we really know the impact of these biases? Do we know whether there is any correlation between what we 'feel' and what actually happens?

Do we need a big, bad screening machine?

Steve
