A reminder to evaluate the work, not just the person doing the work

Here's a super interesting story from the art world that I spotted in the New York Times, titled "The Artwork Was Rejected. Then Banksy Put His Name To It."

The basics of the story, and they seem to be undisputed, are these:

1. The British Royal Academy puts on an annual Summer Exhibition of art, and anyone is allowed to submit a piece for consideration.

2. The anonymous but incredibly famous artist Banksy submitted a painting under a different pseudonym, 'Bryan S. Gaakman', which is an anagram of 'Banksy anagram'.

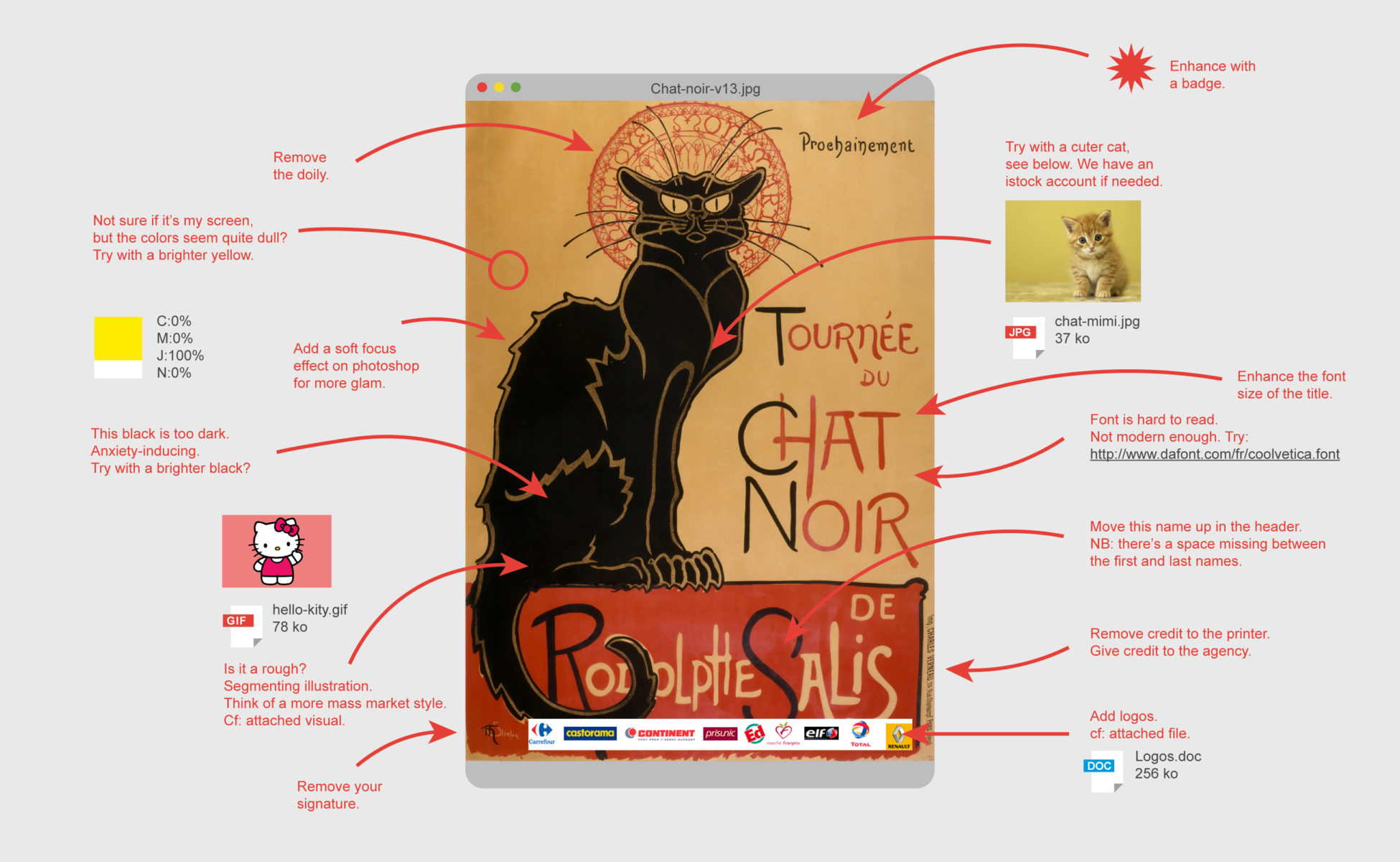

3. The event's judges declined to include 'Gaakman's' submission in the exhibition.

4. One of the event's judges contacted Banksy (how one contacts Banksy was not fully explained) to ask whether the famous artist had a submission for the exhibition. This judge did not know that 'Gaakman' was actually Banksy.

5. Banksy submitted a very slightly altered version of the 'Gaakman' piece, and this time it was accepted for the show. Basically, the same art was declined when it came from an 'unknown artist' but deemed worthy when it came from the famous Banksy.

What can we take away from this little social experiment? Three things at least.

1. We always consider 'who' did the work along with the work itself, when assessing art, music, or even the weekly project status report. We judge, at least a little, on what this person has done, or what we think they have done, in the past.

2. Past 'top' or high performers always get a little bit of a break and the benefit of the doubt. It happens in sports, where close calls usually go in favor of star players, and it happens at work, where the 'best' performers get a little more room when they turn in average, or even below-average, work. They have 'earned' a little more wiggle room than newer or unproven folks. This isn't always a bad thing, but it can sometimes lead to bad decisions.

3. What we want, as managers, is good, maybe even great, 'work'. But what the organization needs is great 'performers'. Great performers don't always do great work, but over time their contributions and results add up to incredible value for the organization. So to ensure that the organization can turn great 'work' into great (and sustainable) long-term performance, every once in a while less-than-great work turned in by a great performer needs to get a pass. Take the long view, if you know what I mean.

That's it for me - have a great day!

Steve